Creating the Other in Online Interaction: Othering Online Discourse Theory

The Emerald International Handbook of Technology-Facilitated Violence and Abuse

ISBN: 978-1-83982-849-2, eISBN: 978-1-83982-848-5

Publication date: 4 June 2021

Abstract

The growth of online communities and social media has led to a growing need for methods, concepts, and tools for researching online cultures. Particular attention should be paid to polarizing online discussion cultures and dynamics that increase inequality in online environments. Social media has enormous potential to create good, but in order to unlock its full potential, we also need to examine the mechanisms keeping these spaces monotonous, homogenous, and even hostile toward some groups. With this need in mind, I have developed the concept and theory of othering online discourse (OOD).

This chapter introduces and defines the concept of OOD and explains the key characteristics and different attributes of OOD in relation to other concepts that deal with disruptive and discriminatory behavior in online spaces. The attributes of OOD are demonstrated drawing on examples gathered from the Finnish Suomi24 (Finland24) forum.

Citation

Vaahensalo, E. (2021), "Creating the Other in Online Interaction: Othering Online Discourse Theory", Bailey, J., Flynn, A. and Henry, N. (Ed.) The Emerald International Handbook of Technology-Facilitated Violence and Abuse (Emerald Studies In Digital Crime, Technology and Social Harms), Emerald Publishing Limited, Leeds, pp. 227-246. https://doi.org/10.1108/978-1-83982-848-520211016

Publisher

Emerald Publishing Limited

Copyright © 2021 Elina Vaahensalo. Published by Emerald Publishing Limited. This chapter is published under the Creative Commons Attribution (CC BY 4.0) licence. Anyone may reproduce, distribute, translate and create derivative works of these chapters (for both commercial and non-commercial purposes), subject to full attribution to the original publication and authors. The full terms of this licence may be seen at http://creativecommons.org/licences/by/4.0/legalcode.


Introduction

Nowadays, people looking for information are almost completely dependent on the internet. Various groups and communities are formed in online environments and social media discourses play a large role in that process. In addition to the potential for widespread distribution, online conversations, ideas, and discourses can be powerful and meaningful. The downside is that easy access to both information and communications makes the dissemination of ideas easier, so hate speech and fake information find their way more stealthily into the hands of internet users (Citron, 2014; Meddaugh & Kay, 2009; Weaver, 2013).

With the growing popularity of social media, inequality and discrimination in online spaces have received increasing attention. However, well-known and frequently used concepts like hate speech, cyberbullying, online misogyny, and online racism are often either impractically vague or impractically narrow to describe the complex ways in which “othering” speech unfolds in online contexts. The problem with the concept of hate speech, for instance, is that it is not confined to online environments, which means that the concept does not take into account the specific characteristics of online interaction, which range from complete anonymity to the different power structures that affect online communities. Hate speech is a multipurpose umbrella term used to describe expressions of hate and hostility regardless of the environment in which they occur. Public speeches, political demonstrations, radio broadcasts, and blog posts can all be defined as hate speech (Waldron, 2012, p. 34; Walker, 1994). The concept of hate speech has also become increasingly commonplace in everyday speech, which has led to subjective perceptions of what constitutes hate speech. Ruotsalainen (2018) states that hate speech has become a rhetorical trope and a populistic tool, which is often used to obscure the actual matter discussed. She notes that hate speech has become a “floating signifier, which avoids strict definition” and is “emptied” from its history and its connotation with racism (Ruotsalainen, 2018, p. 226). Waldron (2012, p. 1,601) further notes that hate speech is often misunderstood as “thinking” rather than communicating, and thus freedom of speech advocates argue that restrictions on speech (including hate speech) are tantamount to censoring thought and freedom of expression. On the other hand, conceptual narrowness is encountered especially when online discourse is examined from the perspective of racism or misogyny. While both concepts are important tools for examining inequality, researchers need concepts that are not limited to race, ethnicity, nationality, or gender. Discourses against inclusion also rely on, for example, socioeconomic background and age.

To address these conceptual and terminological deficiencies, I created the concept and theory of “othering online discourse” (OOD) that can be used to examine polarizing online interaction as a combination of public identity speech and online gatekeeping. By identity speech, I mean expressions of one's identity via online interaction. Especially in anonymous online conversations, identity is often showcased by describing traits and opinions that are hoped to ignite a sense of sameness and social cohesion (see, e.g., Chayko, 2002; Litt & Hargittai, 2016). In this chapter, I argue first that these same dynamics can produce not only sameness, but also otherness and exclusion. Second, I argue that by combining elements from concepts such as hate speech, online racism, and online misogyny, one can create a tool that allows a flexible view on discursive exclusion in online spaces. With the help of the OOD theory, I seek to demonstrate how othering and discursive exclusivity are created from different starting points, by different discursive means, and for different purposes.

In this chapter, I define the concept of OOD, explain the key characteristics of OOD, and demonstrate the flexibility of the theory by introducing different kinds of attributes of OOD that can be used to analyze, describe, and decode any kind of online interaction. To demonstrate the attributes, I provide examples gathered from the Finnish Suomi24 (Finland24) forum. In the Finnish media, Suomi24 is often referred to as an antisocial and cruel environment that hosts a community of online bullies (Vaahensalo, 2018). The same rhetoric is frequently used to describe other anonymous online environments such as the infamous imageboard 4chan or the content rating website Reddit (Arntfield, 2015; ElSherief, Kulkarni, Nguyen, Wang, & Belding, 2018; Miller, 2015; Vainikka, 2018). However, while othering behavior in online environments is often an unpleasant, hostile, and antisocial phenomenon, its power lies specifically in its sociality: “othering” discourses are produced in a public environment for the purpose of others seeing it and interacting with it. Interaction in online environments also needs to be seen in its own original context. In some online communities, generally disapproved behavior may well have the effect of fostering a sense of community.

In the first section of the chapter, I review the concepts of otherness and othering in relation to the study of online discussions and social media. In the second section, I explain further what kind of online space the Suomi24 forum is. I also clarify how I gathered the examples of OOD, and what kind of methods I used for constructing the theory and concept of OOD. In the third section, I review the different ethical issues that define the research of hostile online discussion as well as the utilization of the concept of otherness in research. The fourth section of the chapter is dedicated to a more extensive definition of the concept of OOD and the key characteristics that differentiate OOD from other concepts that deal with discriminatory behavior in online spaces. In the final section of the chapter, I examine the attributes of OOD that are demonstrated with different quotations collected from the Suomi24 forum. These attributes describe how online othering relates to the topic of the discussion, what kind of repercussions othering can have, and how it will appear to the reader.

A Theory of Othering

The concept of otherness refers to the dichotomy between the self and the other; between the “us” and the “them.” Ideas of similarity and sameness are used to construct the idea of “us,” but simultaneously conceptions of difference are used to construct “them” (Bauman & May, 2004, pp. 30–35). “The other” can be seen as a building block for the self, self-image, and identity. Okolie (2003) states that identity has little meaning on its own. Identity and a sense of self are thus acquired and claimed by defining the other. Consequences of claiming identities usually reflect an imbalance of power, since groups do not have equal powers to define both self and the other (Okolie, 2003, p. 2). That is why otherness is often determined or dictated by those who are in a position of power (Jensen, 2011; Miles, 1989; Pickering, 2001). By referring to a position of power, I do not necessarily mean a position of literal political power. The power play of othering operates especially through production of culture, knowledge, and representations (Hall, 1997). For example, in anonymous online discussions, power usually falls into the hands of those who support norms of maleness and whiteness (Phillips, 2019). Popularity, social cohesion, and power are more easily available in anonymous online environments if participants reproduce widely accepted norms.

The process of othering is a key part of the OOD theory. Othering is a process where individuals are classified into the hierarchical groups of “them” and “us.” This often happens by stigmatizing and simplifying differences. Hence, the self or the “us” gives itself identity by setting itself apart from the other (Staszak, 2008, p. 2). One motivation for establishing these kinds of twofold categories is to achieve a sense of social belonging by excluding the undesired and the other. This process produces imbalanced narratives, where some identities are marked as superior and some inferior (Jensen, 2011). Robert Miles (1989, p. 61) points out that by classifying an individual or group of people as the other, the classifier also defines the criteria for how they themselves come to be represented. Through this asymmetric process, the superior maintains their position and at the same time seeks to control the formation of the identity of the inferior group.

According to Jensen (2011), the history of the concept of otherness can be roughly divided into three stages, through which the concept has evolved and taken shape in the research literature. The first step can be seen in G.W.F. Hegel's conception of the master–slave dialectic presented in the Phenomenology of Spirit published in 1807. In Hegel's theory, the juxtaposition toward the other constitutes the self. Simone de Beauvoir (1997) has universalized the theory in relation to both gender and other hierarchical social differences, with men being regarded as the norm and women as the “other.” The next stage can be found in the post-colonial writing of literary scholar Edward Said (1995), who theorized about how the West created orientalist images of the strange, exotic, and above all, of the “other” East. A third stage is exemplified by psychoanalyst Jacques Lacan's (1966) theory on the “little other” (the mirrored/projected self/ego) and the “big Other” (the non-self “other”). According to Lacan (1966), the “big Other” is the symbolic order through which language, discourse, and interaction play a significant role in the formation of self, identity, and the construction of reality (pp. 93–100).

The formation of identity in the dialogue between self and the other shows that the mind and identity are formed as part of an interactive and intersubjective process (Benjamin, 1990). According to Patrick Jemmer (2010, p. 22), the act of othering and the unequal and asymmetric relationship between the self and the other might even be seen as a natural and unavoidable result of social mechanics of intersubjectivity. This phenomenon is, of course, also reflected in online environments, where people negotiate hierarchies, positions of power, and the identities that settle into them. Intersubjectivity is a process of sharing experiences and emotions, and it is inevitably reflected in online discussions as well. Comments on a discussion thread are produced in relation to previous comments, allowing participants to express their interpretations of each other's thoughts and the topic of discussion.

Stuart Hall (2003) argues that globalization has led people to look at their own cultural identity and its relationship to other cultures. The internet has been one of the most powerful engines of globalization, enabling new encounters and visibility for many marginalized groups. Collisions with “new” types of identities may well be one of the reasons why some participants in online conversations have a strong need to underline the superiority of their own identity and emphasize what represents the desired norm. On the other hand, it should also be noted that discriminatory discourses are not a new phenomenon in online environments. Networks have been used to disseminate hate and prejudice even before the widely popular social media platforms like Twitter or Facebook (see, e.g., Back, 2002; Massanari, 2017; Phillips, 2019). However, online communication has become more commonplace and, as a result, the handling of social and political issues in social media has become more widespread and, above all, more visible.

Suomi24 Forum as an Anonymous Community of Communities

Suomi24 forum is one of Finland's largest online topic-centric discussion forums and one of the largest non-English online discussion forums in the world. The site has operated under the same name since 2000 and was originally established in 1998. Suomi24 allows users to engage in discussion anonymously, without registration. The topics vary from everyday life to politics. The number of subforums in Suomi24 has varied over time but over a 20-year period, it has ranged from 20 to 30. Under those subforums, there are even more specialized and defined subcategories, the number of which can at best be up to several thousand. In a way, Suomi24 is a community of smaller communities. Users of Suomi24 cannot create subcategories themselves, but the administrators take user suggestions into consideration when creating new categories (Lagus, Ruckenstein, Pantzar, & Ylisiurua, 2016, p. 6; Suominen, Saarikoski, & Vaahensalo, 2019, pp. 11–15).

Suomi24 is an open, anonymous, and low-threshold forum intended for “all Finns” to participate in. However, the ideal of equal participation cannot be achieved if the forum is used to disseminate hate. Anonymous discussion is often more uninhibited and open (Papacharissi, 2004, p. 267; Suler, 2004, pp. 321–322). Massanari (2017, p. 331) suggests that when the threshold of participation is low and the interaction is anonymous – or pseudonymous – the interactions tend to feature elements of play that might not be as visible or common in social media spaces that enforce “real name” participation. The openness of the Suomi24 forum makes it an interesting subject for research because all forms of disruptive behavior in online communication benefit from technologies that make it easier to engage in confrontations and disseminate discriminatory ideas.

Method

In this study, I collected examples to demonstrate the different attributes of OOD from 66 discussion threads in six different subcategories of Suomi24. The topics of these six categories were patriotism; developing countries and development aid; poverty; world affairs; trans people; and Romani people. Most of these subcategories have a specific topic or are targeted at a specific kind of user, but the world affairs forum is a general forum for discussion that deals with both domestic and international news.

I approached the concept of OOD by layering different qualitative methods that acknowledge the social, cultural, and technological dimensions of OOD. This combination of methods can also be called triangulation, according to its broader definition (Denzin, 1978; Jick, 1979). The methodological tools for this research were concept analysis; practices of online ethnography; and critical discourse analysis.

First, concept analysis is an activity where concepts and their characteristics are clarified and described (Nuopponen, 2010). The definition of OOD was done by applying an eight-step concept analysis model (Puusa, 2008), based on the model originally presented by John Wilson (1963). These eight steps consist of identifying the origin of the concept; setting goals for analysis; specifying different interpretations and uses of the concept; identifying the defining characteristics of the concept; developing a model case of the concept; reviewing related concepts; describing the antecedents and consequences of the concept; and naming empirical referents. The most critical step in defining OOD was differentiating it from other similar concepts, such as online racism, cyberbullying, and hate speech.

OOD is a concept mainly for research use, but the phenomenon itself does not exist as a mere concept and it cannot be definitively defined without the study of the actual online discussions. Therefore, the second approach utilized in this study was an online ethnographic approach which facilitated the observing and collecting of examples of OOD. Observing online discussion and collecting data do not necessarily fall completely within the definition of online ethnographic research, especially if the researcher does not interact with the forum participants that are being observed. Hine (2015, pp. 157–161) describes this kind of approach as an unobtrusive method that utilizes generalized ethnographic strategies without any actual interaction. Especially in a media ethnographic-focused study, the researcher's participation is not perceived solely as an interaction with the informants. In digital environments such as an online forum, the visitor is rarely a mere observer. The visitor interacts with the site through the user interface and by clicking on links and using search engines. All these acts and interactions leave a digital footprint that affects the observation of the discussions and the data collection (Laaksonen et al., 2017; Sumiala, 2012).

The third tool used in this study was critical discourse analysis. Critical discourse analysis is not a method in itself but serves as a framework through which different power relations and language-supported social practices are explored (Wodak, 1999). In this case, critical discourse analysis is a tool for addressing the often subtle nature of OOD. Nikolas Coupland (2010, p. 247) points out that othering may not be noticeable from the “surface” of the text. Occasionally, speech that is intended to be divisive is indirect, and in order to comprehend it, it is necessary to understand the social context of the speech. Discourse analysis is not just a superficial analysis of text, but also an analysis of contexts and interactions (Fairclough, 2003; Van Dijk, 2000). Because of the indirect and potentially subtle nature of othering discourse, a discourse analytical perspective helps to contextualize online interactions. Comments posted online can be taken out of context and viewed as stand-alone texts, but then the communication between separate texts might be ignored.

The Ethics of Studying Hateful Online Discourse

The ethics of studying hateful online interaction can be divided into two main issues: how to protect the privacy of subjects participating in the online discussion; and how to address the explicit and hostile comments in the discussion threads.

Guidelines for online research have been provided by, among others, the Association of Internet Researchers (AoIR). The guidelines take into account the intricacies of online research, such as assessing possible harm to the subject. In cases of web-based forums, such as Suomi24, AoIR guidelines advise taking note of the expectations for privacy and publicity in that forum and whether the participants would consider the information shared sensitive. According to the guidelines, it is also important to recognize personally identifiable information in the potential data and address risks to individual privacy accordingly (Markham & Buchanan, 2012, p. 11). In the case of the Suomi24 forum, the discussions are public and accessible to everyone. It is important to remember that publicity does not make any data just “free game,” which could be uncritically gathered for use (Zimmer, 2018). However, the publicity of the forum is known to the users themselves, and partly for this reason discussions often take place behind unregistered nicknames. Due to the public nature of the discussions and the anonymity of the commenters, the consent of the users contained in the data has not been collected in this study. Comments in their original context are intended to be anonymous and visible for all internet users, and commenters have not participated in the discussions under their own names or with other personally identifiable information. I have also made sure that among the comments collected, there is no identifiable, sensitive, or private information and no individuals are recognizable in the examples presented in this chapter. The discussion material collected is originally in Finnish, and translating the comments into English has also acted as anonymization.

While protecting forum participants is an important ethical component of this research, one must also be aware of the problems that arise from using the concept of otherness. Criticism has been directed specifically toward the potentially simplifying effect of the concepts of othering and otherness (Gingrich, 2004; Jensen, 2011, p. 66). Utilizing these concepts often requires the use of different kinds of social categories, and that in turn may highlight intragroup differences and asymmetry even further. Unreflective use of social categories can lead to harmful oversimplification and overgeneralization (Dervin, 2015, pp. 7–8; Gillespie, Howarth, & Cornish, 2012, pp. 399–400). Another significant pitfall in the study of otherness is the reproduction of racializing or other possibly offensive discourses. By examining discriminatory discourses, the researcher might give a voice to hateful discourses that do not deserve to be heard.

However, dismantling hateful dynamics in online communities is impossible if the dark side of internet communication is left in the shadows. Jane (2014), who has explored hostile misogynist rhetoric on the internet, suggests that explicit online hate needs to be explored and made visible because only in that way can its widespread impact be truly understood (see also Jane, this volume). Jane (2014) also states that the anonymous producers of hateful discourses are likely to benefit from the fact that the most violent side of social media is often considered too detestable to be repeated in an academic context. In this chapter, I similarly highlight how blatantly violent OOD can be and in that way also make visible the destructive effect it can have on equality in online spaces, drawing on explicit examples of OOD.

Definition and Key Characteristics of Othering Online Discourse

OOD is a social process of online interaction and is often about exploiting public visibility of an online space to define and reinforce the polarizing dichotomy between “us” and “them.” OOD is also a way to express whose participation in the conversation is wanted and whose is unwanted. By deeming someone's participation as unwanted, producers of othering discourses strive to create spaces that accept the presence and visibility of only certain types of identities.

As a phenomenon, OOD aligns and intertwines with the broad framing of “internet culture,” which Phillips (2019) describes as a discursive category that often reproduces the norms of whiteness and maleness. Phillips argues that by reproducing these norms in the forms of racist and misogynistic content, internet culture has restricted participation in online interactions to those who have, in one way or another, accepted these norms. From this viewpoint, OOD can be seen as a form of internet gatekeeping that strives to hold on to these norms and, at its worst, dehumanizes groups that may not be in an equal position to defend themselves. In this section, I list seven key characteristics that differentiate OOD from other similar concepts.

Not a Monologue

OOD occurs in conversations and situations that are open for commenting. One-way individual texts (such as blog posts, news texts, or online videos) can also have an othering effect, but they are not able to communicate with their recipients in the same way as comments made in an online forum or a social media platform. This distinguishes OOD from, among other things, the concept of hate speech, which, in its multidimensionality, can occur both in interactional and individual texts.

As I stated earlier, OOD is about sociality. In some online spaces, even the most incivil othering and adherence to hateful narratives conform to communal norms (Vainikka, 2018; Watt, Lea, & Spears, 2002, p. 73). For instance, incivil correspondence among online forum participants takes the form of a back-and-forth banter in which commenters compete with each other to see who can create the cruelest way to describe the other (see, e.g., Jane, 2014, p. 560). At their worst, communally shared hateful narratives and norms can even propagate behaviors that are ultimately exploitative or violent (see, e.g., Quayle, Vaughan, & Taylor, 2006).

A Public Forum

Participants of OOD are aware of the interactivity and publicity of the conversation. Not all online conversations are truly interactive, but a comment posted on a discussion forum is by definition intended to be part of social interaction. By publicity, I mean in this case that the interaction is visible and open to an audience. Some online communities meet in more closed spaces that require registration to participate. Othering discourses are not limited to social media spaces that are open for everyone regardless of registration. However, hateful discourses naturally gain a wider audience, if they can be found by everyone.

Arguments about Humanity

Othering discourses are particularly present in discussions that concern identities and humanity in general. This does not mean that OOD is not to be found in discussions circulating around, for example, cars, pets, or travel. However, it is clear that prejudiced ideas about identities are brought up in forums where the topics of the discussions deal prominently with identity, humanity, and the policies affecting them (Hmielowski, Hutchens, & Cicchirillo, 2014, p. 1,197; Papacharissi, 2004, p. 278). The topic of the discussion does not need to be particularly flammable but an emotive or polarizing topic that emphasizes some kind of conflict can easily create a cycle of othering in which one's own “normative” identity and opinions are defended by pushing down the supposed other (see, e.g., Coffey & Woolworth, 2004, p. 12).

An Intersectional Phenomenon

Dynamics of online interaction need to be looked at with concepts that are not restricted by gender or ethnicity. As a framework, intersectionality helps to reveal how power works in diffuse ways through the creation of overlapping identity categories (see, e.g., Cho, Crenshaw, & McCall, 2013). Just as in real life, countless marginalized groups are affected by online discrimination and harmful power structures. A variety of different aspects of identity and social status influence how people are talked about and how people are encountered in social media. The diversity of online othering has been acknowledged in many studies of online racism and hate speech (Atton, 2006; Back, 2002; Baumgarten, 2017). It is therefore important that research of online discrimination and technology-facilitated violence can also be approached with concepts that take into account the intersectionality of online discrimination.

It is About Groups

Cyberbullying or online bullying is targeted and often focused on individuals or groups whose members are individually identifiable. This also applies to hate speech, which can also be directed at individuals. The definition of cyberbullying also emphasizes the repetition of harassment, which makes the activity very personal and deliberate (Hinduja & Patchin, 2010, p. 206). Cyberbullying and personally directed hate speech can meet the definition of OOD if the bullying is justified with group characteristics, such as ethnicity, gender, nationality, or socioeconomic background. As a general rule, OOD is about generalization and categorization instead of personal harassment.

Subtle and Cloaked

Othering, whether online or offline, is not always openly hostile or aggressive. Stereotypical views and comments of the other can range from innocent concerns and fears to genuine and open hostility (Pickering, 2001, p. 71). Teun A. Van Dijk (2000) describes a subtle form of discriminatory speech as “new” racism which is typically not overtly violent or hostile. Often described as “merely speech,” this kind of racism pervades everyday life from ordinary conversations to television shows and schoolbooks and is just as effective in marginalizing groups of people (Van Dijk, 2000, p. 34).

The sometimes subtle nature of racism is also acknowledged in the framework of critical race theory. Critical race theory recognizes that racism is deeply rooted in the fabric and system of societies (Delgado, Stefancic, & Harris, 2017). OOD draws on this view of deeply rooted discrimination since power relations established in society are also reflected in online interactions. Humor is also used to convey stereotyping and marginalizing ideas (see, e.g., Coupland, 2010, p. 254; Hughey & Daniels, 2013, pp. 334–335; Malmqvist, 2015, pp. 748–749). At times, it is also common for different hostile groups to seek credibility by using more subtle language and referring to terms such as “community” and “identity” rather than hardcore racist terminology (Atton, 2006, p. 576).

A Discursive Counterbalance

Massanari (2017) describes discriminatory and hostile cultures that are distributed via social media with the term “toxic technocultures” (p. 330). These cultures often rely on harassment, on pushing against ideas of diversity, and on the othering of marginalized groups (Massanari, 2017). OOD could be seen as part of the process of forming such hostile cultures and communities, but OOD is also part of group formation in online communities that may not meet Massanari's (2017) definition of “toxic technocultures.” OOD is not always a conscious act of harassment and can also be used as an act of resistance against such toxic cultures. Sometimes OOD is utilized to place typically normative communities and master narratives – cis people, straight men, or upper-middle-class people, for example – in a subordinate position. In these cases, OOD is used to subvert and reclaim the experience of otherness (see, e.g., McKenna & Chughtai, 2020; Mehra, Merkel, & Bishop, 2004; Staszak, 2008, p. 2).

Attributes of Othering Online Discourse: Orientation and Usage

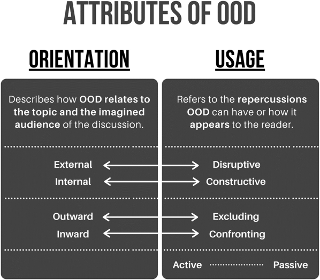

Different attributes of OOD can be divided into two categories: orientation and usage. Orientation describes how OOD relates to the topic and the imagined audience of the discussion. Usage refers to the repercussions that othering can have or how it will appear to the reader (see Fig. 13.1). The two categories also work in relation to each other. The consequences of othering and how they appear to the reader are often determined by the topic of the discussion or the imagined audience.

In this case, the definition of the imagined audience is based on the topic of the forum. For example, in a forum dedicated to the topic of patriotism, patriotic anti-immigration commenters can be seen to belong to the imagined audience, while proponents of multiculturalism represent the “outsiders.” Similarly, in a forum for transgender people, transgender people naturally represent the imagined audience while cis-gendered people represent outsiders. In a topic-oriented forum, such as Suomi24, the imagined audience is usually more clearly defined, while in social media platforms, such as Facebook and Twitter, the imagined audience is much more ambiguous (Cook & Teasley, 2011; Litt & Hargittai, 2016; Marwick & boyd, 2014). If the OOD theory were applied to data collected from Facebook or Twitter, the researcher should be aware that definitions of the imagined audience rely heavily on their own interpretation.

Orientation of Othering Online Discourse

There are four types of orientation of OOD in relation to the topic and the audience of the discussion: external and internal; and inward and outward (see Fig. 13.2). External and internal discourses describe whether the producer of othering discourses is possibly part of the imagined audience of the forum or whether they come from outside. Inward and outward discourses, in turn, refer to the target of the othering: Inward othering is targeted at the imagined audience, and outward othering at those outside the audience. Defining the boundary between outsiders and insiders may be next to impossible just by analyzing the discussion data. However, identifying the difference between internal and external discourses helps to outline the different starting points from which OOD is produced and what kind of power relations OOD creates. Positive representation of the imagined audience is usually better received, and discourses that strengthen the social cohesion of the imagined audience are more likely to resonate with the participants of the forum.

Usage of Othering Online Discourse

In addition to the previously listed orientations of OOD, there are roughly six different usages of othering: disruptive and constructive; excluding and confronting; and active and passive (see Fig. 13.3). Constructive and disruptive othering refer to whether the commenter conforms to the norms maintained by the imagined audience or whether they discursively oppose those norms. Excluding and confronting othering describe whether the target of the othering discourses is explicitly excluded from the interaction or instead explicitly addressed by the producer of the discourse. By active or passive othering, I mean whether the comment is explicitly and openly offensive or whether the discourses are more discreet and subtle in nature.

Examples of Orientation and Usage

Internal othering discourses are produced by commenters who are part of the imagined audience. Below is an excerpt from a conversation collected from the patriotism subcategory in the Suomi24 forum:

… Shit talk! Europeans are being fucked again. Somalis are the most homogeneous people in Africa, all belonging to the same ethnic group. Yes, they are divided into different clans. However, is it not better that they do what they have always done? By that I mean killing each other there in the Horn of Africa instead of coming here to murder and rape us.

(Suomi24 forum, July 29, 2004)

Based on the comment, it is reasonable to assume that the author identifies themselves as a white patriot and thus belongs to the imagined audience of the forum. It is also typical that internal othering discourses in openly aggressive, racist discussion threads include few divergent opinions and the comments consist mainly of conforming to previous threads. This is not uncommon in anonymous online interaction as it is not easy to disagree with the comments made, even in faceless and low-threshold online conversations (Mikal, Rice, Kent, & Uchino, 2014). Internal othering discourses are typically produced for like-minded readers, and they are often constructive in nature. In other words, instead of dichotomy, the purpose of these internal othering discourses is to strengthen internal social cohesion and commonly shared norms of the forum. The constructive and positive effect of comments is therefore limited to within the group, as when taken out of context, the content of the comments can be vile and far from helpful.

External othering discourses come from outside the imagined audience. If the forum is meant, for example, for people afflicted by poverty, external othering discourses are produced by people who do not identify themselves as poor, as in this example:

As far as I know, it takes two to make children. Don't have more children than you can afford to support! I myself have only one child and my salary is about 5200 euros a month. I pay almost two tons of tax on it, and this tax money is used to support social welfare parasites.

(Suomi24 forum, March 27, 2010)

The comment above is taken from a discussion forum on poverty and a discussion thread about a single mother's financial distress. Once again, it is impossible to say how the author identifies themselves. However, according to the comment, the author clearly places themselves above people who live on social welfare, and thus the othering discourses come from outside the imagined audience. I have previously pointed out that OOD often acts to promote group formation in some forums that foster a culture of hate and bigotry. As shown in the example above, in addition to its constructive nature, OOD can also be deliberately disruptive – especially when the commenter comes from outside the imagined audience. The above commenter emphasizes their superiority and in no way seeks to find common ground with other users. This kind of disruptive action may also take the form of trolling, whereby the commenter may seek to shock and entertain themselves by disrupting the flow of the conversation (Hardaker, 2010, p. 238; Phillips, 2015, pp. 3, 17 & 24–25). Disruptive OOD resists the norms that are upheld by the imagined audience. For example, if the forum's shared norms encourage inclusion and diversity, disruptive OOD opposes inclusion.

When the discourse is inward, othering is directed to the imagined audience of the forum:

Poor people cannot have any relationships at all. No woman wants a poor man. I'm always alone and depressed because I'm always alone.

(Suomi24 forum, September 30, 2015)

While the commenter above acknowledges they are part of the discussion's imagined audience, they also direct their othering comment at that audience. The commenter positions themselves outside the cultural norm, where a high financial status is believed to help in finding a companion. In this example, a sense of community is built through a shared experience of otherness and opposition to the cultural master-narrative (see, e.g., Vainikka, 2018). This kind of inward and internal OOD may foster a communal experience of otherness and can work as a form of peer support. When the othering discourses are inward and thus comments are explicitly targeted at the imagined audience of the forum, the comments are confronting.

In turn, outward discourse is directed to someone outside the imagined audience of the discussion. This may mean, for example, that in a forum for trans people, othering discourses are directed at cis-gendered people:

Cis men seem to be more phobic than women. Men are always on average more phobic/conservative in everything – our culture even requires it. After all, you are no man at all if you don't drink beer, watch hockey, and hate gays.

(Suomi24 forum, May 18, 2010)

As can be seen from the example above, norms of whiteness, straightness, and maleness can also be turned upside down in groups meant for marginalized identities. In these cases, the master-narrative that favors cis men is set to a subordinate position. Instead of just peer support, this kind of outward OOD can create a sense of empowerment in a group that shares experiences of otherness. The way the commenter above addresses cis men can also be seen as excluding othering. Excluding othering means that the commenter explicitly expresses their desire to keep the “other” out of the conversation or the commenter expresses an assumption that the other is not involved in the discussion.

Maria Ruotsalainen (2018, p. 226) has mapped the use of the term hate speech in Finnish public discourse and has found that hate speech typically reduces the other to the role of mere object instead of seeing the other as a subject. This finding aligns with these examples of confronting and excluding OOD. Regardless of whether the discourses are confronting or excluding, the other often remains merely as a static object that is described through stereotypes and inferiority.

By passive othering, I mean that its producer does not necessarily deliberately seek to offend. A person who produces passive othering can use very subtle – but still harmful – stereotypes. Passive othering may describe feelings such as fear, frustration, suspicion, or anxiety. By active othering, I am referring in particular to comments that explicitly refer to violence, hatred, or inferiority of the other – just like in this example:

When it comes to an accident, a plane crash or a shipwreck, or even a natural disaster, most people feel some compassion for the victims. But when it comes to a ship carrying refugees, I feel only joy in the accident. The more victims, the more joyful I feel.

(Suomi24 forum, April 20, 2015)

While the commenter above describes feelings of joy, the comment itself contains much more destructive emotions since the comment openly wishes for the death of refugees.

These attributes can work as a way to describe the othering qualities of dialogue and also as a way to decode the process of OOD in a discussion thread. They help to illuminate the social aspects of OOD and demonstrate how a single dialogue, thread, or comment can work in many ways and still contain characteristics that contribute to social injustice, discrimination, bigotry, and even violence.
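For researchers who code discussion data by hand, the attributes described above could be recorded in a simple annotation structure. The following Python sketch is only one possible illustration and is not part of the OOD theory itself: the class names, field names, and the `tally` helper are all hypothetical, and the labels are assumed to be assigned by a human reader rather than detected automatically. Each attribute may be left unset, since the chapter notes that it is not always possible to determine, for example, whether a commenter belongs to the imagined audience. The three sample records follow the readings given above for the first three excerpts.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, Optional


class Producer(Enum):      # orientation: where the othering comes from
    INTERNAL = "internal"
    EXTERNAL = "external"


class Target(Enum):        # orientation: whom the othering is aimed at
    INWARD = "inward"
    OUTWARD = "outward"


class Norms(Enum):         # usage: relation to the imagined audience's norms
    CONSTRUCTIVE = "constructive"
    DISRUPTIVE = "disruptive"


class Address(Enum):       # usage: is the target shut out or spoken to?
    EXCLUDING = "excluding"
    CONFRONTING = "confronting"


class Tone(Enum):          # usage: openly offensive or subtle
    ACTIVE = "active"
    PASSIVE = "passive"


@dataclass(frozen=True)
class OODAnnotation:
    """One hand-coded comment; None means 'could not be determined'."""
    comment_id: str        # hypothetical identifier chosen by the researcher
    producer: Optional[Producer] = None
    target: Optional[Target] = None
    norms: Optional[Norms] = None
    address: Optional[Address] = None
    tone: Optional[Tone] = None


def tally(annotations: Iterable[OODAnnotation]) -> Counter:
    """Count how often each attribute value was assigned."""
    counts: Counter = Counter()
    for ann in annotations:
        for value in (ann.producer, ann.target, ann.norms, ann.address, ann.tone):
            if value is not None:
                counts[value.value] += 1
    return counts


# Illustrative records only; the IDs are placeholders, not forum data.
coded = [
    OODAnnotation("excerpt-1", producer=Producer.INTERNAL, norms=Norms.CONSTRUCTIVE),
    OODAnnotation("excerpt-2", producer=Producer.EXTERNAL, norms=Norms.DISRUPTIVE),
    OODAnnotation("excerpt-3", producer=Producer.INTERNAL, target=Target.INWARD,
                  address=Address.CONFRONTING),
]
counts = tally(coded)
```

Keeping each attribute as a separate optional field, rather than one combined label, reflects the point that a single comment can work in several ways at once and that some attributes may remain open to interpretation.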

Conclusion

In summary, OOD is a phenomenon that is social, intersectional, and discursively diverse. OOD often exploits the public nature of online spaces to create a sense of community based on marginalization and harmful stereotypes. There is an inherent duality in the sociality of OOD. In some cases, by producing othering discourse, commenters invite like-minded people to ostracize the “other” and create social cohesion based on discrimination. In other cases, OOD takes a much more disruptive form, where othering discourses are used to disagree with and possibly troll the imagined audience of the conversation. OOD can also be seen as a form of internet gatekeeping that, at its worst, dehumanizes groups that do not fit a narrowly defined norm of whiteness and maleness. Sometimes this discursive gatekeeping takes the form of empowerment and rebellion. In those cases, the narratives against inclusion and diversity are set to an inferior position and seen as the other. At the heart of the OOD theory is the idea that while public online discussions are a powerful medium to convey identity speech and they can help to construct sameness and group identities, they can also produce inequality, hostility, marginalization, and the experience of otherness.

In this chapter, I demonstrated that by combining elements from concepts dealing with inequality and discursive exclusivity, it is possible to develop a theory that is flexible enough to examine different kinds of online othering discourses. With the examples of othering discourses, I demonstrated the attributes of OOD and showed how discursive exclusivity is created from different starting points, by different discursive means, and for different purposes. In addition to the theoretical core of OOD, the concept also has a practical side. OOD theory and especially its attributes can be utilized as an analysis model to decode and contextualize online discussion data. The attributes of OOD help to understand the relationships between the producer of othering, the target of othering, the possible purpose of the othering, and the online space that hosts the discourses. Considering these different contexts is important when identifying and, above all, preventing technology-facilitated hatred and violence.

References

Arntfield, M. (2015). Towards a cybervictimology: Cyberbullying, routine activities theory, and the anti-sociality of social media. Canadian Journal of Communication, 40(3), 371–388.

Atton, C. (2006). Far-right media on the internet: Culture, discourse and power. New Media & Society, 8(4), 573–587.

Back, L. (2002). Aryans reading Adorno: Cyber-culture and twenty-first-century racism. Ethnic and Racial Studies, 25(4), 628–651.

Bauman, Z., & May, T. (2004). Thinking sociologically (2nd ed.). Hoboken, NJ: Blackwell Publishing Ltd.

Baumgarten, N. (2017). Othering practice in a right-wing extremist online forum. language@Internet, 14(1). Retrieved from https://www.languageatinternet.org/articles/2017/baumgarten

Benjamin, J. (1990). An outline of intersubjectivity: The development of recognition. Psychoanalytic Psychology, 7, 33–46.

Chayko, M. (2002). Connecting: How we form social bonds and communities in the internet age. New York, NY: State University of New York Press.

Cho, S., Crenshaw, K., & McCall, L. (2013). Toward a field of intersectionality studies: Theory, applications, and praxis. Signs, 38(4), 785–810.

Citron, D. K. (2014). Hate crimes in cyberspace. Cambridge, MA: Harvard University Press.

Coffey, B., & Woolworth, S. (2004). “Destroy the scum, and then neuter their families”: The web forum as a vehicle for community discourse? The Social Science Journal, 41(1), 1–14.

Cook, E. C., & Teasley, S. D. (2011). Beyond promotion and protection: Creators, audiences and common ground in user-generated media. In Proceedings of the 2011 iConference (pp. 41–47).

Coupland, N. (2010). “Other” representation. In J. Jaspers, J. Verschueren, & J. O. Östman (Eds.), Society and language use (Vol. 7, pp. 241–260). Amsterdam: John Benjamins Publishing.

De Beauvoir, S. (1997). The second sex. London: Vintage [first published in French in 1949].

Delgado, R., Stefancic, J., & Harris, A. (2017). Critical race theory: An introduction (3rd ed.). New York, NY: New York University Press.

Denzin, N. K. (1978). The research act: A theoretical introduction to sociological methods (2nd ed.). New York, NY: McGraw-Hill.

Dervin, F. (2015). Discourses of othering. In K. Tracy, C. Ilie, & T. Sandel (Eds.), The international encyclopedia of language and social interaction (pp. 447–456). New York, NY: John Wiley & Sons.

ElSherief, M., Kulkarni, V., Nguyen, D., Wang, W. Y., & Belding, E. (2018). Hate lingo: A target-based linguistic analysis of hate speech in social media. In Twelfth International AAAI Conference on Web and Social Media.

Fairclough, N. (2003). Analysing discourse: Textual analysis for social research. London & New York: Routledge.

Gillespie, A., Howarth, C. S., & Cornish, F. (2012). Four problems for researchers using social categories. Culture & Psychology, 18(3), 391–402.

Gingrich, A. (2004). Conceptualizing identities. In G. Baumann & A. Gingrich (Eds.), Grammars of identity/alterity – A structural approach (pp. 3–17). Oxford: Berghahn.

Hall, S. (1997). The centrality of culture: Notes on the cultural revolutions of our time. In K. Thompson (Ed.), Media and cultural regulation (pp. 207–238). London: Sage.

Hall, S. (2003). Kulttuuri, paikka, identiteetti. In M. Lehtonen & O. Löytty (Eds.), Erilaisuus (pp. 85–128). Tampere: Vastapaino.

Hardaker, C. (2010). Trolling in asynchronous computer-mediated communication: From user discussions to academic definitions. Journal of Politeness Research: Language, Behavior, Culture, 6(2), 215–242.

Hinduja, S., & Patchin, J. W. (2010). Bullying, cyberbullying, and suicide. Archives of Suicide Research, 14(3), 206–221.

Hine, C. (2015). Ethnography for the internet: Embedded, embodied and everyday. London: Bloomsbury Publishing Plc.

Hmielowski, J. D., Hutchens, M. J., & Cicchirillo, V. J. (2014). Living in an age of online incivility: Examining the conditional indirect effects of online discussion on political flaming. Information, Communication & Society, 17(10), 1196–1211.

Hughey, M. W., & Daniels, J. (2013). Racist comments at online news sites: A methodological dilemma for discourse analysis. Media, Culture & Society, 35(3), 332–347.

Jane, E. (2014). ‘Back to the kitchen, cunt’: Speaking the unspeakable about online misogyny. Continuum: Journal of Media & Cultural Studies, 28(4), 558–570.

Jemmer, P. (2010). The O(the)r (O)the(r). In P. Jemmer (Ed.), Engage Newcastle (Vol. 1, pp. 7–39). Newcastle: Newcastle Philosophy Society.

Jensen, S. Q. (2011). Othering, identity formation and agency. Qualitative Studies, 2(2), 63–78.

Jick, T. D. (1979). Mixing qualitative and quantitative methods: Triangulation in action. Administrative Science Quarterly, 24(4), 602–611.

Laaksonen, S. M., Nelimarkka, M., Tuokko, M., Marttila, M., Kekkonen, A., & Villi, M. (2017). Working the fields of big data: Using big-data-augmented online ethnography to study candidate–candidate interaction at election time. Journal of Information Technology & Politics, 14(2), 110–131.

Lacan, J. (1966). Écrits. Paris: Le Seuil.

Lagus, K. H., Ruckenstein, M. S., Pantzar, M., & Ylisiurua, M. J. (2016). SUOMI24: Muodonantoa aineistolle. Helsinki: University of Helsinki.

Litt, E., & Hargittai, E. (2016). The imagined audience on social network sites. Social Media + Society, 2(1). doi:10.1177/2056305116633482

Malmqvist, K. (2015). Satire, racist humour and the power of (un)laughter: On the restrained nature of Swedish online racist discourse targeting EU-migrants begging for money. Discourse & Society, 26(6), 733–753.

Markham, A., & Buchanan, E. (2012). Ethical decision-making and internet research: Version 2.0. recommendations from the AoIR ethics working committee. Retrieved from aoir.org/reports/ethics2.pdf

Marwick, A. E., & Boyd, D. (2014). Networked privacy: How teenagers negotiate context in social media. New Media & Society, 16(7), 1051–1067.

Massanari, A. (2017). #Gamergate and the Fappening: How Reddit's algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346.

McKenna, B., & Chughtai, H. (2020). Resistance and sexuality in virtual worlds: An LGBT perspective. Computers in Human Behavior, 105, 106199.

Meddaugh, P. M., & Kay, J. (2009). Hate speech or “reasonable racism?” The other in Stormfront. Journal of Mass Media Ethics, 24(4), 251–268.

Mehra, B., Merkel, C., & Bishop, A. P. (2004). The internet for empowerment of minority and marginalized users. New Media & Society, 6(6), 781–802.

Mikal, J. P., Rice, R. E., Kent, R. G., & Uchino, B. N. (2014). Common voice: Analysis of behavior modification and content convergence in a popular online community. Computers in Human Behavior, 35, 506–515.

Miles, R. (1989). Racism. London: Routledge.

Miller, T. (2015, December 17). Social or antisocial media? APPS Policy Forum. Retrieved from https://www.policyforum.net/social-or-anti-social-media/

Nuopponen, A. (2010). Methods of concept analysis – a comparative study. LSP Journal – Language for special purposes, professional communication, knowledge management and cognition, 1(1), 2–14.

Okolie, A. (2003). Introduction to the special issue – identity: Now you don't see it; now you do. Identity, 3(1), 1–7.

Papacharissi, Z. (2004). Democracy online: Civility, politeness, and the democratic potential of online political discussion groups. New Media & Society, 6(2), 259–283.

Phillips, W. (2015). This is why we can't have nice things: Mapping the relationship between online trolling and mainstream culture. Cambridge, MA: The MIT Press.

Phillips, W. (2019). It wasn't just the trolls: Early internet culture, “fun,” and the fires of exclusionary laughter. Social Media + Society, 5(3). doi:10.1177/2056305119849493

Pickering, M. (2001). Stereotyping: The politics of representation. Basingstoke: Palgrave.

Puusa, A. (2008). Käsiteanalyysi tutkimusmenetelmänä. Premissi, 4(2008), 36–43.

Quayle, E., Vaughan, M., & Taylor, M. (2006). Sex offenders, internet child abuse images and emotional avoidance: The importance of values. Aggression and Violent Behavior, 11(1), 1–11.

Ruotsalainen, M. (2018). Tracing the concept of hate speech in Finland. Nykykulttuurin tutkimuskeskuksen julkaisuja, 122, 213–230.

Said, E. (1995). Orientalism. London: Penguin Books [first published in 1978].

Staszak, J. (2008). Other/otherness. In R. Kitchin & N. Thrift (Eds.), International encyclopedia of human geography (pp. 43–47). London: Elsevier.

Suler, J. (2004). The online disinhibition effect. CyberPsychology and Behavior, 7(3), 321–326.

Sumiala, J. (2012). Media and ritual: Death, community and everyday life. London: Routledge.

Suominen, J., Saarikoski, P., & Vaahensalo, E. (2019). Digitaalisia kohtaamisia: Verkkokeskustelut BBS-purkeista sosiaaliseen mediaan. Helsinki: Gaudeamus.

Vaahensalo, E. (2018). Keskustelufoorumit mediainhokkeina – Suositut suomenkieliset keskustelufoorumit mediassa. Wider screen, 3(2018). Retrieved from http://widerscreen.fi/numerot/2018-3/keskustelufoorumit-mediainhokkeina-suositut-suomenkieliset-keskustelufoorumit-mediassa/

Vainikka, E. (2018). The anti-social network: Precarious life in online conversations of the socially withdrawn. European Journal of Cultural Studies. doi:10.1177/1367549418810075

Van Dijk, T. A. (2000). New(s) racism: A discourse analytical approach. Ethnic minorities and the media, 37, 33–49.

Waldron, J. (2012). The harm in hate speech. Cambridge, MA: Harvard University Press.

Walker, S. (1994). Hate speech: The history of an American controversy. Lincoln: University of Nebraska Press.

Watt, S., Lea, M., & Spears, R. (2002). How social is internet communication? A reappraisal of bandwidth and anonymity effects. In S. Woolgar (Ed.), Virtual society?: Technology, cyberbole, reality (pp. 61–77). New York: Oxford University Press.

Weaver, S. (2013). A rhetorical discourse analysis of online anti-Muslim and anti-Semitic jokes. Ethnic and Racial Studies, 36(3), 483–499.

Wilson, J. (1963). Thinking with concepts. Cambridge: Cambridge University Press.

Wodak, R. (1999). Critical discourse analysis at the end of the 20th century. Research on Language and Social Interaction, 32(1–2), 185–193.

Zimmer, M. (2018). Addressing conceptual gaps in big data research ethics: An application of contextual integrity. Social Media + Society, 4(2). Retrieved from https://journals.sagepub.com/doi/full/10.1177/2056305118768300
